Thomas Otter on AI in HR tech and the Carbolic Smoke Ball legal case

Here is Thomas Otter’s latest UNLEASH column.

Why You Should Care

As buyers and users of software, you are bombarded with all sorts of claims and promises. Many of these claims and promises involve AI or ML.

But how do you identify what is real and useful, and what is useless or even dangerous?

Decisions you make about HR tech can have a powerful impact on people’s careers and livelihood.

Back in my youth, I studied law. I have forgotten most of what little I learnt, but one case stuck with me. I think of it often.

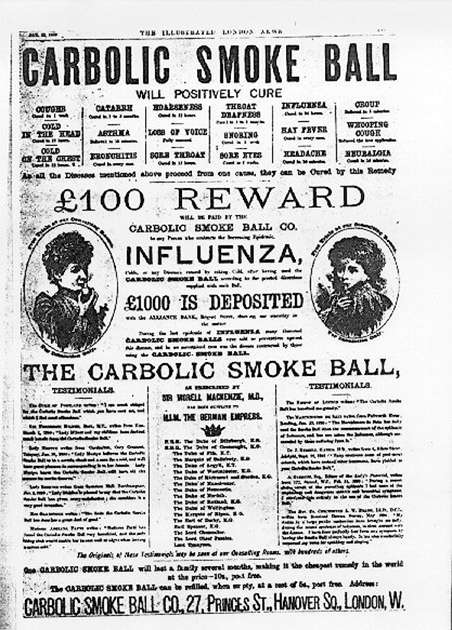

“Contract – Offer by Advertisement – Performance of Condition in Advertisement – Notification of Acceptance of Offer – Wager – Insurance – 8 & 9 Vict. c. 109 – 14 Geo. 3, c. 48, s. 2.

The defendants, the proprietors of a medical preparation called “The Carbolic Smoke Ball,” issued an advertisement in which they offered to pay £100 to any person who contracted influenza after having used one of their smoke balls in a specified manner and for a specified period.

The plaintiff, on the faith of the advertisement bought one of the balls, and used it in the manner and for the period specified, but nevertheless contracted the influenza.

Held, affirming the decision of Hawkins, J., that the above facts established a contract by the defendants to pay the plaintiff £100 in the event which had happened; that such contract was neither a contract by way of wagering within 8 & 9 Vict. c. 109, nor a policy within 14 Geo. 3, c. 48, s. 2; and that the plaintiff was entitled to recover.”

One supposes that this case could be seen as an early example of vapourware.

My point is: As buyers and users of software, you are bombarded with all sorts of claims and promises. Today, many of these claims and promises involve AI or ML.

Your challenging job is to navigate through the miasma of hype, and identify what is real and useful, and what is useless or even dangerous.

Recent academic research has highlighted a lot of issues with AI usage in HR tech.

Here is what you need to ask vendors:

- The greater the promise, the greater your diligence needs to be.

- Ask the vendor where they get the data from to build their models. How do they keep the data up to date? What is the provenance of the data?

- Ask the vendor how will your data be included in the dataset? What controls do you have over that process?

- Ask the vendor for a copy of their data protection impact assessment (DPIA). Article 35 of the GDPR states that where a type of processing, in particular one using new technologies, and taking into account the nature, scope, context, and purposes of the processing, is likely to result in a high risk to the rights and freedoms of natural persons, the controller shall, prior to the processing, carry out an assessment of the impact of the envisaged processing operations on the protection of personal data.

While not all ML/AI in HR is high risk, a lot of it is.

- By definition, machine learning involves the machine learning something. Ask them to explain how the machine learns, and give you some examples of how that learning has benefitted customers. If they say they have been running the ML for several years, they should be able to show how the results have got more accurate over time.

- Ask them to differentiate between predictive analytics and AI/ML. Much of what is called ML actually isn’t ML; it is analytics done with a bit more statistics than before.

- Ask them to define what they mean by algorithm.

- When vendors talk of automated decision-making, ask the fourth question above again: where is the DPIA?

- Does the model actually work? Is it reliable and valid? Where is the science?

- Many vendors will say they have an ethics council that makes sure that they do ethical AI. This could be a good thing, but ask them for an example of where the ethics council has stopped a product feature, or demanded its modification.

- Ethics is cool, but obeying laws is cooler. Question how they manage and mitigate bias, and create fairness and transparency. How does the product cope with differing non-discrimination laws, for instance US EEO law versus the UK Equality Act?

- Look at some real examples of how the tool works. Examine the results on either side of a decision. So if the product provides a longlist of, say, 20 candidates, look carefully at the data of candidates 20 and 21. Why was one selected and the other not?

- Ask the vendor for their opinion on the new proposed EU AI regulations.

- Ask the vendor: if an applicant sues us, the employer, because of an AI-driven decision from your product, what recourse do we have against you?
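The "candidates 20 and 21" check in the list above is simple enough to run yourself if the vendor will export the ranked results. Here is a minimal Python sketch of the idea. Everything in it is an assumption for illustration: the `Candidate` fields, the `score` and `features` names, and the sample data are hypothetical and not taken from any real product.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    score: float    # hypothetical ranking score exported from the vendor's tool
    features: dict  # hypothetical input data the tool used for this candidate


def boundary_pair(candidates, cutoff):
    """Return (last selected, first rejected) when ranking by score, highest first."""
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    if cutoff < 1 or cutoff >= len(ranked):
        raise ValueError("cutoff must leave at least one candidate on each side")
    return ranked[cutoff - 1], ranked[cutoff]


def feature_differences(a, b):
    """Map each feature on which the two candidates differ to their two values."""
    keys = sorted(set(a.features) | set(b.features))
    return {
        k: (a.features.get(k), b.features.get(k))
        for k in keys
        if a.features.get(k) != b.features.get(k)
    }


# Made-up data: a longlist of 2 out of 4 applicants (use cutoff=20 for the real thing).
candidates = [
    Candidate("A", 0.91, {"years_experience": 7, "degree": "BSc"}),
    Candidate("B", 0.74, {"years_experience": 5, "degree": "BSc"}),
    Candidate("C", 0.73, {"years_experience": 5, "degree": "none"}),
    Candidate("D", 0.40, {"years_experience": 1, "degree": "none"}),
]

last_in, first_out = boundary_pair(candidates, cutoff=2)
print(last_in.name, first_out.name, feature_differences(last_in, first_out))
```

If the only thing separating the last candidate in from the first candidate out is a field you would not be comfortable defending to the rejected applicant, that is the conversation to have with the vendor.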

Remember HR technology is processing the data of humans.

Decisions you make about HR tech can have a powerful impact on people’s careers and livelihood.

When software vendors suggest that AI/ML is going to dramatically improve hiring, internal mobility and employee wellness, you need to ask the tough questions. Do your research.

Founder & CEO

Thomas advises leading and emerging HRTECH vendors and their investors, guiding them to build better products.