HR, beware of AI’s ‘bias susceptibility’, says Rippl report

Job seekers may feel that AI makes hiring fairer, but how biased is AI in reality? UNLEASH unpicks the results of employee benefits platform Rippl’s AI experiment with its CEO, Chris Brown.

News In Brief

AI bias has long been a concern, and Rippl's recent experiment with AI shows that it needs to remain top of mind.

Rippl asked ChatGPT and Runway to generate a job description and an image of a person holding a given job title in the UK, and the results showed that these AI models simply perpetuate gender, race and age stereotypes.

What's the solution? Rippl's CEO Chris Brown shares his thoughts with UNLEASH.

Ever since AI came onto the scene in the 1950s, there has been a lot of discussion about its potential to perpetuate bias and lead to unfair discrimination against certain groups.

This conversation has come even more to the fore since OpenAI launched its generative AI chatbot ChatGPT to the world in November 2022.

Everyone wants to make sure that AI is not biased, particularly in the world of work, where there’s a real focus on making recruitment, progression and pay fairer.

Recent data from HireVue shows that employees and HR leaders aren’t too worried about AI bias, and actually think AI can reduce bias and unfair treatment in hiring. But does this feeling reflect reality?

Employee benefits platform Rippl decided to test this out. It prompted ChatGPT to write a biography for each of a range of job titles in the UK, and then used Runway to generate a visual of a person performing each role.

The results are shocking, and they reveal that these AI models simply perpetuate damaging gender, race and age stereotypes in the workplace.

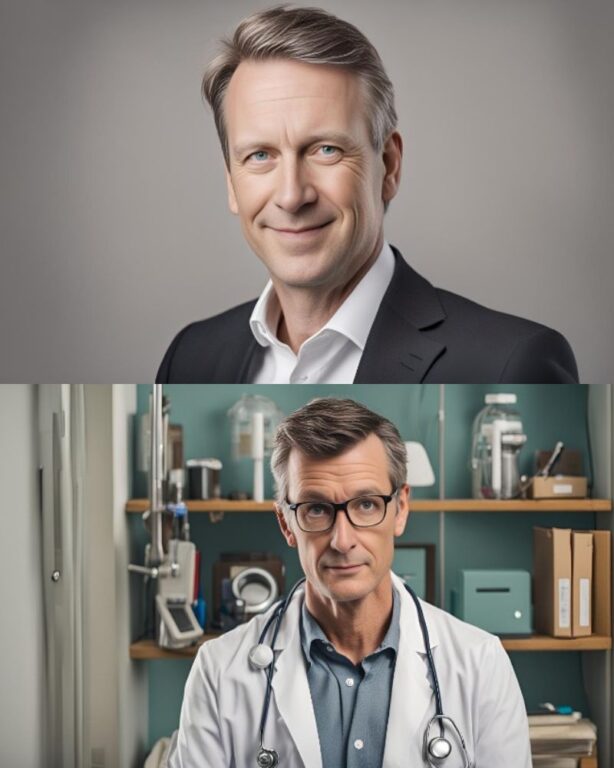

When prompted to produce an image and bio for a CEO, doctor and engineer, the AI models only generated men – ChatGPT and Runway failed to produce any bios or images of women for these roles.

AI-generated images of a CEO (top) and a Doctor (bottom). Credit: Rippl.

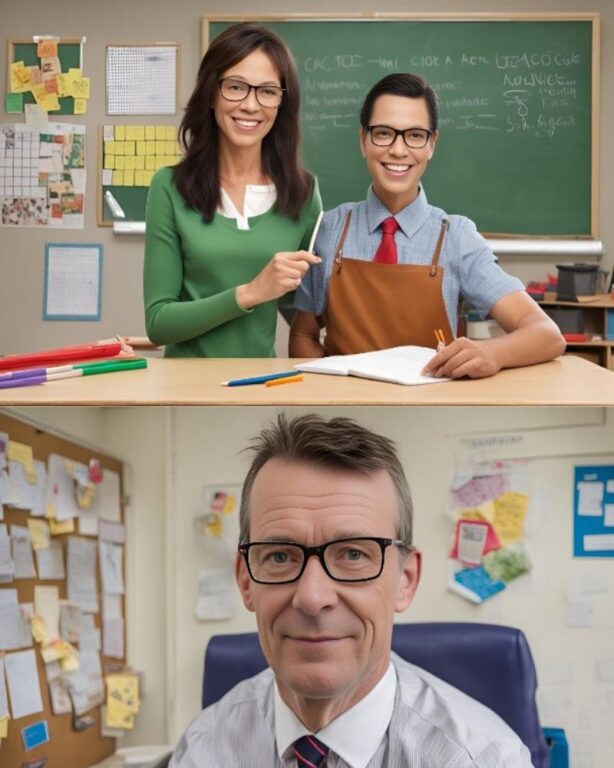

The AI also produced male-dominated results for Carpenter, Electrician, Manufacturing Worker and Salesperson, while it produced only images and bios of women for Housekeeper, HR Manager, Marketing Assistant, Receptionist and Nurse.

Rippl’s experiment further found that, for CEOs, the AI models depicted middle-aged white men who had attended top UK universities, whereas marketing assistants were exclusively young white women.

And while the AI models recognised that teachers could be either male or female, for headteachers they produced only men.

AI-generated images of Teachers (top) and a Headteacher (bottom). Credit: Rippl.

Speaking exclusively to UNLEASH, Rippl’s CEO Chris Brown shares: “Speaking from my own experience of integrating AI tools into daily business operations, I was certainly hoping for a more positive outlook when I commissioned the study.”

Brown adds: “With AI being a tool that is being adopted across countless industries and disciplines, this doesn’t paint a positive picture for the future, and in some cases could risk regression in the efforts made for diversity and inclusion.”

HR’s role in fixing AI bias

This AI “bias susceptibility”, as Rippl’s report terms it, “must remain a key consideration for business leaders” if the corporate world is “to avoid this becoming even further widespread”.

The report calls for companies, and particularly HR leaders, to ensure their practices “remain human-led to ensure all talent is accurately represented, seen and heard, and celebrated for their contribution”.

The question that remains is precisely how HR leaders should do this.

Rippl’s Brown shares: “HR leaders need to ensure they are reviewing the AI systems they use, especially in recruitment and employee evaluation, to identify and mitigate any biases.

“This begins with education to ensure HR teams can identify potential problems before they happen. This means that human oversight will remain essential in the decision-making process.”

This echoes guidance in a recent UNLEASH op-ed from Harvard University’s Paola Cecchi-Dimeglio.

She calls for HR leaders to take the lead on AI bias because they are “the gatekeepers of talent and culture”. To do this, they need to keep compliance, trust, transparency, efficiency, fairness, human touch and reinforced learning top of mind.

At the end of the day, the aim is to “build AI systems that are not only efficient and innovative, but also fair and trustworthy, thereby fostering an inclusive and equitable workplace for all”.

Brown agrees, concluding that HR teams need “to continue to actively promote inclusivity within their practices and policies, so they can counter the stereotypes that sadly remain to be reinforced by AI today”.

Chief Reporter

Allie is an award-winning business journalist and can be reached at alexandra@unleash.ai.